Web Scraping with Python: The Ultimate Guide to Building Your Scraper

If in the 20th century we dealt with a “time is money” mindset, now it’s all about data. More data means more insights, so better decisions, and more money. Web scraping and web scrapers have hugely increased in popularity, especially in the last decade. More and more businesses need an accurate marketing strategy, which requires gathering vast amounts of information in a short amount of time. With the new spotlight shining on data extraction, companies are starting to see ways in which they can benefit. For developers, it could be a good way to boost their business or just a neat project to hone their coding skills.

Even if your work has nothing to do with web scraping, but you are a Python team player, by the end of this article you will have learned about a new niche where you can make great use of your skills.

Step #2: Feed that HTML to BeautifulSoup

    from bs4 import BeautifulSoup as bs  # `bs` is the alias used below

    # Name the parser explicitly so BeautifulSoup does not have to guess one
    soup = bs(page, 'html.parser')

Step #3: Extract each book from the list and get the wiki link of each book

    rows = soup.find('table').find_all('tr')
    books_links = for row in rows]
    base_url = ' '
    books_urls =

The next step, getting all the books’ data, is the most lengthy and important one. We will first consider only one book; assume it’s the first one in the list. If we open the wiki page of the book, we will see the different information about the book enclosed in a table on the right side of the screen.

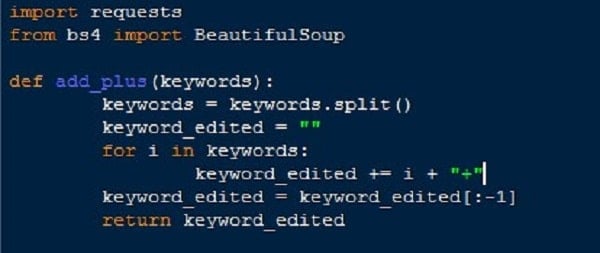
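As a rough, offline sketch of Steps #2 and #3, the snippet below pulls the first link out of each table row and turns it into a full URL. It is not the article’s code: it swaps in the standard library’s `html.parser` for BeautifulSoup so it runs without extra installs, and `SAMPLE_HTML`, `FirstLinkPerRow`, `extract_book_urls`, and the `https://example.org` base URL are made-up placeholders. The real list page’s markup may differ.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Made-up stand-in for the list page; the real page's markup may differ.
SAMPLE_HTML = """
<table>
  <tr><th>Title</th><th>Author</th></tr>
  <tr><td><a href="/wiki/Book_One">Book One</a></td>
      <td><a href="/wiki/Author_A">Author A</a></td></tr>
  <tr><td><a href="/wiki/Book_Two">Book Two</a></td>
      <td><a href="/wiki/Author_B">Author B</a></td></tr>
</table>
"""


class FirstLinkPerRow(HTMLParser):
    """Collect the href of the first <a> found inside each <tr>."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._row_done = True  # True outside rows, so stray links are ignored

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row_done = False  # new row: no link taken yet
        elif tag == "a" and not self._row_done:
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
                self._row_done = True  # skip the remaining links in this row

    def handle_endtag(self, tag):
        if tag == "tr":
            self._row_done = True


def extract_book_urls(html, base_url):
    """Return one absolute URL per table row that contains a link."""
    parser = FirstLinkPerRow()
    parser.feed(html)
    return [urljoin(base_url, link) for link in parser.links]


BASE_URL = "https://example.org"  # hypothetical placeholder, not the real site
book_urls = extract_book_urls(SAMPLE_HTML, BASE_URL)
print(book_urls)
```

Using `urljoin` rather than plain string concatenation keeps the result correct whether or not the base URL ends with a slash.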

Creating a web scraper in Python

We want our code to navigate the list, go to each book’s wiki page, extract info like genre, name, author, and publishing year, and then store this info in a Python dictionary (you can store the data in a pandas DataFrame as well). So, to do this we need a couple of steps:

1. Fetch main URL HTML code.
2. Feed that HTML to BeautifulSoup.
3. Extract each book from the list and get the wiki link of each book.
4. Get all books data, clean, and plot final results.

Step #1: Fetch main URL HTML code

    import requests as rq  # `rq` is the alias used below

    url = ' '
    page = rq.get(url).text
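One possible shape for the per-book dictionary, assuming the four fields named above (name, author, genre, publishing year). The helper `make_book_record`, the `books` dictionary keyed by title, and the sample values are all hypothetical placeholders, not data scraped from the list.

```python
def make_book_record(name, author, genre, year):
    """Bundle the fields we want to scrape for one book into a dict."""
    return {"name": name, "author": author, "genre": genre, "year": year}


# Placeholder values for illustration only, not scraped data.
books = {}
for name, author, genre, year in [
    ("Example Title A", "Example Author A", "Novel", 1950),
    ("Example Title B", "Example Author B", "Satire", 1961),
]:
    # Key each record by title so a book can be looked up directly.
    books[name] = make_book_record(name, author, genre, year)

# Look up one field of one book by its title.
print(books["Example Title A"]["genre"])
```

If you prefer a table, `pandas.DataFrame(list(books.values()))` builds one row per book from the same dictionaries.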

Is web scraping legal?

The most important question when it comes to web scraping: is it legal? The more detailed answer: scraping publicly available data for non-commercial purposes was announced to be completely legal in late January 2020.

You might wonder, what does publicly available mean? Publicly available information is information that anyone can see or find on the internet without the need for special access. So, information on Wikipedia, social media, or Google’s search results are examples of publicly available data. Now, social media is somewhat complicated, because there are parts of it that are not publicly available, such as when a user sets their information to be private. In this case, scraping that information is illegal.

One last thing: there’s a difference between publicly available and copyrighted. For example, you can scrape YouTube for video titles, but you can’t use the videos commercially because they are copyrighted.

But we have yet to write code that scrapes different webpages. For this section, we will scrape the wiki page with the best 100 books of all time, and then we will categorize these books based on their genre, trying to see if we can find a relation between the genre and the list: which genre performed best. The wiki page contains links to each of the 100 books as well as their authors.
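Categorizing books by genre comes down to a tally. The sketch below assumes each scraped book ends up as a dictionary with a `genre` key; the `books` records are invented placeholders and `count_genres` is a hypothetical helper, so treat this as one possible approach rather than the article’s own code.

```python
from collections import Counter

# Invented placeholder records, not results from the real list.
books = [
    {"name": "Book A", "genre": "Science fiction"},
    {"name": "Book B", "genre": "Satire"},
    {"name": "Book C", "genre": " science fiction "},
    {"name": "Book D", "genre": None},  # genre missing on the page
]


def count_genres(records):
    """Tally genres, ignoring case, stray whitespace, and missing values."""
    genres = (r["genre"].strip().lower() for r in records if r.get("genre"))
    return Counter(genres)


genre_counts = count_genres(books)

# most_common(1) returns a list holding the single (genre, count) leader.
top_genre, top_count = genre_counts.most_common(1)[0]
print(top_genre, top_count)
```

Normalizing case and whitespace before counting keeps variants of the same genre label from being counted separately.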

Web scraping is the technique programmers use to automate the process of finding and extracting data from the internet within a relatively short time. Needless to say, doing that by hand would be quite a tedious task: it’s easier to write code to extract data from 100 webpages than to go through them manually. That’s why most data scientists and developers go with web scraping using code.
